Humans and robots are collaborating on process, quality and cost-efficiency – but it is sensor technology that enables the interaction

Automotive production in the future will see us working with assistants that are modular, versatile and, above all else, mobile. Some fully automated, autonomous solutions are already being used at production sites. Driven by Industry 4.0, this promises highly flexible workflows, maximum system throughput and productivity, as well as economic efficiency. However, ensuring this outcome requires exactly the right safety technology for the application in question.

One of the major issues associated with Industry 4.0 is making work processes flexible. At the extreme end of the spectrum, this may involve manufacturing products in batch size 1 under industrial mass-production conditions, that is, manufacturing unique items on a conveyor belt. This type of smart factory, where products and production processes are closely integrated with state-of-the-art information and communication technology, is becoming home to machines that are increasingly intelligent, and increasingly autonomous as a result.

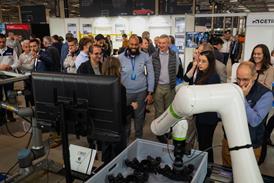

A question of space and time

Interaction between humans and machines is also set to increase in industrial manufacturing. This is because combining the abilities of humans with those of robots results in production solutions that are characterised by optimised work cycles, improved quality, and greater cost-efficiency, to name a few examples.

At the same time, however, machines that are autonomous yet work primarily alongside humans require new safety concepts that effectively support more flexible production processes. Industry 4.0 is not the first time that industrial automation has focused on interaction between humans and machines, but collaboration between humans and robots is becoming increasingly dominant. In collaboration, humans and robots share the same workspace at the same time. An example of this is a mobile platform with a robot that takes parts from a belt or a pallet and transports them to a workspace, where they are presented and handed to the worker stationed there.

In collaborative scenarios such as this, the conventional safe detection solutions used for coexistence or cooperation are no longer sufficient. Instead, the forces, speeds, and travel paths of robots now need to be monitored, restricted, and, where necessary, stopped, depending on the actual level of danger. The distance between humans and robots is therefore becoming a key safety-relevant parameter. Thanks to safety technology, a robot can now be operated without a surrounding safety fence.
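One common way to quantify that distance, shown here as a simplified sketch rather than a design rule, is the protective separation distance used for speed and separation monitoring in ISO/TS 15066, assuming the person and the robot move at constant speed during the reaction and stopping phases:

```latex
S_p(t_0) = S_h + S_r + S_s + C + Z_d + Z_r, \qquad
S_h = v_h \, (T_r + T_s), \qquad
S_r = v_r \, T_r
```

Here v_h is the speed of the person towards the robot, v_r the speed of the robot towards the person, T_r the reaction time of the robot system, T_s its stopping time, S_s the robot's stopping distance, C the intrusion distance (how far a body part can reach into the monitored area before it is detected), and Z_d and Z_r the position uncertainties of the person (as measured by the sensor) and of the robot. For example, with an assumed v_h = 1.6 m/s and T_r + T_s = 0.5 s, the human contribution alone is S_h = 0.8 m. The actual values for a given cell must come from its risk assessment and the applicable standards.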

Safety laser scanners monitor precisely defined areas around the robot and are connected to the robot's safety controller. The easy-to-program protective fields can be adapted to the robot's individual working range, and numerous monitoring scenarios can be switched in at any time during automatic operation. As soon as the safety laser scanner detects that the working range is clear again, the robot resumes work, which also reduces downtime.
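To illustrate the principle of a protective field, the sketch below converts scanner beams (angle, distance) into x/y points and checks whether any point lies inside a polygonal field. All names, the field shape, and the data format are hypothetical; real safety laser scanners evaluate their fields internally in certified firmware and signal the result to the safety controller, so this is an explanation of the idea, not a safety function.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def polar_to_xy(angle_deg: float, distance_m: float) -> Point:
    """Convert one scan beam (angle in degrees, range in metres) to scanner x/y coordinates."""
    a = math.radians(angle_deg)
    return (distance_m * math.cos(a), distance_m * math.sin(a))


def inside_polygon(p: Point, poly: Sequence[Point]) -> bool:
    """Ray-casting point-in-polygon test (points exactly on an edge may go either way)."""
    x, y = p
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed;
        # this also guarantees y1 != y2, so the division below is safe.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def field_violated(scan: Sequence[Tuple[float, float]], field: Sequence[Point]) -> bool:
    """True if any measured point of the scan falls inside the (hypothetical) protective field."""
    return any(inside_polygon(polar_to_xy(a, d), field) for a, d in scan)


if __name__ == "__main__":
    # Hypothetical rectangular field: 2 m ahead of the scanner, 1 m to each side.
    protective_field = [(0.0, -1.0), (2.0, -1.0), (2.0, 1.0), (0.0, 1.0)]
    clear_scan = [(0.0, 3.0), (90.0, 2.5)]
    person_scan = [(0.0, 3.0), (0.0, 1.5)]
    print("robot may continue:", not field_violated(clear_scan, protective_field))
    print("robot may continue:", not field_violated(person_scan, protective_field))
```

Switching between monitoring scenarios then amounts to selecting a different polygon for the current robot position or task, which is why the fields can follow the robot's individual working range.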

Safety laser scanners provide not only a protective function, but also support during navigation. This enables the worker to interact directly with the robot, which moves autonomously. No physical guards are required, making this system genuinely true to the spirit of creating sustainable flexibility in production.

The ability of mobile robots to move independently through factory halls and transport goods or workpieces represents the future of production logistics. They share the routes they travel, as well as the shelving areas, with tugger trains and workers. New materials are ordered automatically through ERP systems and distributed among workstations entirely autonomously, in keeping with the principles of Industry 4.0.

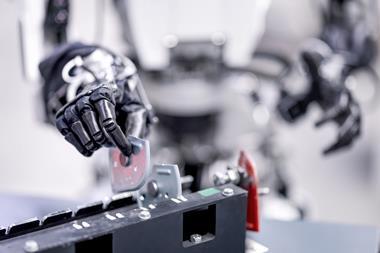

The PLB system was developed by SICK for precise position detection of components in totes and boxes

The “speed and separation monitoring” type of collaboration is completely in keeping with the concept of highly flexible work scenarios, and therefore with the principles of Industry 4.0 and production processes in smart factories. It is based on monitoring the speed and travel paths of the robot and adjusting them to the working speed of the operator in the protected collaboration area. Safety distances are monitored continuously, and the robot is slowed down, stopped, or diverted when necessary. Once the distance between the operator and the machine exceeds the minimum distance again, the robot system automatically resumes its normal speeds and travel paths. This immediately restores robot productivity.
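The decision logic described above can be sketched as a small function that maps the measured human-robot separation to a robot mode. The two thresholds (a minimum distance for stopping and a larger one for slowing down) are hypothetical parameters assumed to come from the application's risk assessment; diverting the robot onto another path is not modelled, and in practice this logic runs in the certified safety controller.

```python
from enum import Enum


class RobotMode(Enum):
    FULL_SPEED = "full speed"
    REDUCED_SPEED = "reduced speed"
    PROTECTIVE_STOP = "protective stop"


def ssm_mode(separation_m: float, min_distance_m: float, slowdown_distance_m: float) -> RobotMode:
    """Choose a robot mode from the current human-robot separation (sketch).

    min_distance_m: stop at or below this separation (hypothetical value).
    slowdown_distance_m: reduce speed at or below this larger separation.
    """
    if slowdown_distance_m <= min_distance_m:
        raise ValueError("slowdown distance must exceed the minimum distance")
    if separation_m <= min_distance_m:
        return RobotMode.PROTECTIVE_STOP
    if separation_m <= slowdown_distance_m:
        return RobotMode.REDUCED_SPEED
    # Far enough away: the robot automatically returns to its normal speed.
    return RobotMode.FULL_SPEED


if __name__ == "__main__":
    # Hypothetical operator approaching the robot and then walking away again.
    for d in (3.0, 1.8, 0.9, 1.8, 3.0):
        mode = ssm_mode(d, min_distance_m=1.0, slowdown_distance_m=2.0)
        print(f"{d:.1f} m -> {mode.value}")
```

In this example the robot stops when the operator is 0.9 m away and returns to full speed without any manual reset once the operator has moved beyond 2.0 m, which is the productivity advantage the speed and separation approach aims for.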

Functional safety for HRC

No two examples of human-robot collaboration (HRC) are the same. This means that an individual risk assessment for the HRC application is required, even if the robot concerned has been developed specifically to interact with humans. Such “cobots” do have many features of an inherently safe construction, built into their basic design. At the same time, however, the collaboration space must also meet fundamental requirements, such as minimum distances to adjacent areas with crushing or pinching hazards. Safety solutions based on various technologies are becoming increasingly intelligent and keep making new HRC applications possible, because they can fulfil ever more demanding requirements.

Mobile systems

The intelligent factory concept envisages individual production elements being networked with one another during production. This requires the data that is captured, evaluated, and transmitted by sensors to be reliable, and transparency across all stages of the production and logistics chain is crucial here. It is not just data networking that is important, however: mobile systems provide a link between individual stages of work, especially in automated and networked production environments. They represent a new form of mobility, one that also relies on innovative solutions to protect humans and materials.

Components in load carriers

Specifically developed to provide an economical means of handling components using robots, SICK’s PLR is a stand-alone sensor for part localisation and rapid robot integration. It combines 2D and 3D image acquisition technology in a single solution that works reliably under constantly changing ambient conditions. After only a short set-up time, it can be used to expand existing robot cells and stations.

Components in totes

The PLB system was developed by SICK for precise position detection of components in totes and boxes. The CAD-based process of teaching in new components allows new applications to be configured quickly and easily while guaranteeing short cycle times and a high throughput. The system consists of a 3D camera, part localisation software, plus additional tools for simple robot integration and communication with a higher-level control system. The camera delivers 3D images and is immune to artificial ambient light. With tools and functions for synchronising the PLB system with the robot coordinate system, for communicating with the robot, and for collision-free positioning of the robot gripper in relation to the part, the system can be integrated into production.
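Synchronising a 3D camera with the robot coordinate system generally means that a calibration provides the camera's pose in the robot base frame, so that every part pose the camera detects can be chained into robot coordinates. The sketch below shows that chaining with 4x4 homogeneous transforms. It is a generic illustration under the assumption of an already known calibration; the function names and numbers are hypothetical and are not the PLB tools or their interface.

```python
import numpy as np


def make_transform(rotation: np.ndarray, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation matrix and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def part_pose_in_robot_base(T_base_cam: np.ndarray, T_cam_part: np.ndarray) -> np.ndarray:
    """Chain calibration (camera in robot base) with the detection (part in camera)."""
    return T_base_cam @ T_cam_part


if __name__ == "__main__":
    # Hypothetical calibration: camera 1.0 m above the floor at x = 0.5 m, looking
    # straight down (a 180-degree rotation about x, i.e. diag(1, -1, -1)).
    T_base_cam = make_transform(np.diag([1.0, -1.0, -1.0]), [0.5, 0.0, 1.0])
    # Hypothetical detection: part 0.8 m in front of the camera, not rotated.
    T_cam_part = make_transform(np.eye(3), [0.1, 0.2, 0.8])
    T_base_part = part_pose_in_robot_base(T_base_cam, T_cam_part)
    print("part position in robot base frame [m]:", T_base_part[:3, 3])
```

The resulting pose is what a gripper target would be derived from; checking that the gripper can reach it without colliding with the tote walls is a separate step, which the text above attributes to the PLB tooling.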

Robot guidance

SICK developed the URCap Inspector software to enable integration of the PIM60 Inspector 2D vision sensor into a Universal Robots control. The live image from the sensor, calibration and alignment to robot coordinates, the setting of grip positions, and the changing of reference objects are now all available in the Universal Robots control unit. A camera-based robot guidance system can be set up in a few minutes.
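Aligning a 2D vision sensor to robot coordinates typically comes down to finding a mapping from image pixels to positions in the robot's working plane. The sketch below fits a 2D affine transform by least squares from a few point pairs (pixel position of a feature and the robot position measured at that feature). This is a generic technique chosen for illustration, not the URCap Inspector's actual calibration method; it assumes the parts lie in a single plane and that lens distortion and perspective effects are negligible (otherwise a homography would usually be fitted instead). All names and numbers are hypothetical.

```python
import numpy as np


def fit_affine_2d(pixels, robot_xy) -> np.ndarray:
    """Least-squares 2D affine map from image pixels (u, v) to robot (x, y).

    pixels, robot_xy: sequences of shape (N, 2) with N >= 3 non-collinear points.
    Returns a 2x3 matrix A such that [x, y] = A @ [u, v, 1].
    """
    pixels = np.asarray(pixels, dtype=float)
    robot_xy = np.asarray(robot_xy, dtype=float)
    if pixels.shape != robot_xy.shape or pixels.ndim != 2 or pixels.shape[0] < 3:
        raise ValueError("need at least three matching (u, v) / (x, y) pairs")
    design = np.hstack([pixels, np.ones((pixels.shape[0], 1))])  # rows [u, v, 1]
    solution, *_ = np.linalg.lstsq(design, robot_xy, rcond=None)  # shape (3, 2)
    return solution.T


def pixel_to_robot(A: np.ndarray, u: float, v: float) -> np.ndarray:
    """Map one pixel position to robot plane coordinates."""
    return A @ np.array([u, v, 1.0])


if __name__ == "__main__":
    # Hypothetical calibration points: 0.2 mm per pixel, image v axis opposite to robot y.
    px = [(0, 0), (100, 0), (0, 100), (100, 100)]
    mm = [(150, 40), (170, 40), (150, 20), (170, 20)]
    A = fit_affine_2d(px, mm)
    print("grip position [mm]:", pixel_to_robot(A, 50, 50))
```

Using more than three calibration points, as here, lets the least-squares fit average out small measurement errors in the taught robot positions.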