In the automotive industry’s relentless quest for efficiency, automation and artificial intelligence (AI) may seem like silver bullets, and panellists at the recent AMS livestream hour agreed that these rapidly improving technologies can indeed bring enormous benefits. However, the road to successful automation is one with a fair few obstacles.

Any system in a modern production plant needs to be completely robust, which means that it can take a while to get set up. According to Matthias Schindler, head of artificial intelligence innovation for BMW production system, setting up an AI system in itself isn’t too complex, and for a basic use case, can be done in a matter of hours. However, laying the groundwork – getting the funding and completing a proof of concept – took years. Getting an AI system to talk to legacy systems can be equally time consuming.

BMW’s first serious foray into AI for production was a system that can recognise whether the correct model designation badge, such as 330i, 530e or X5, has been placed on the right car. Schindler said that the project started in 2018; the system is now in use at the Dingolfing plant and is being rolled out at other locations. “Today it would probably take us some hours to set up the AI, but what is much more important and takes much longer is what we call it system integration,” he said.

Ensuring that the camera is triggered to take photos at the right time, rather than filming video, and linking the AI’s perception to the order bank and the quality control systems are what take time. “You have to have a connection and as we have legacy systems, the interfaces can be challenging and pretty time consuming to connect,” he added.

Paula Carsí de la Concepción, manufacturing engineering supervisor and technology specialist in emerging technologies at Ford’s Valencia engine plant, concurred that it is not the neural networks, which are the fundamental mechanisms behind AI, that are time consuming to set up. Instead, in Ford’s case, it was accounting for all the variability and slowly refining the concept.

She oversaw a project that uses microphones to determine whether an electrical connector has been connected properly, based on the sound it makes as the two terminals lock together. The breakthrough for the project was the realisation that ultrasonic microphones would filter out background noise that made normal microphones unusable.

The system has now been running on the line for over three years and has been gathering data for further improvement. The next goal is to make the system wireless, but the vast quantities of data generated by the ultrasonic microphones are proving a challenge, as neither Bluetooth nor Wi-Fi can cope. However, a proof of concept is being trialled on the line right now, she added.

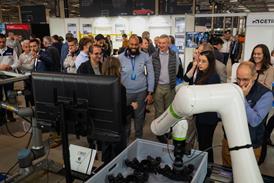

For Symbio, a company developing software to power robotics, the biggest barrier to fast ramp-up is not the core software itself but access to the hardware it is supposed to run on. Manufacturer clients can be cagey about releasing that hardware before seeing proof that the software works, which creates something of a catch-22.

“[The delays are] more around receiving the hardware that we need to show the lab demonstration that our customers demand,” explained head of product marketing Kevin Konkos. “My hope is that as we continue to deliver more applications, they see the value of maybe skipping some of these steps and going straight into production so that we can enable the robot to learn on the job.”

The imitation game

One way to cut ramp-up time, as well as a potential solution to hardware not being available immediately, is simulation software. BMW uses the same AI and neural networks in its new autonomous transport robots, which can find their own way around the plant without the lines on the floor that are typically required for AGVs. The robots have been such a success that BMW spun off Idealworks to further develop and sell the technology to other companies.

The trouble is that neural networks rely on trial and error for training. While the badge recognition system can be run in the background to learn, errors with transport robots would involve them running into objects or people. “So this is why [Idealworks] have a great simulation environment where they rebuild significant parts of plants or logistical areas to train their logistical AGV. They only test it live, as they can do a lot of training before with synthesised data, which accelerates the entire training process, and which helps them to make the robot safe,” Schindler explained.

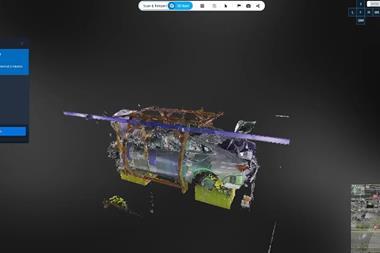

Simulation can be a powerful training tool for AI, but according to Michel Morvan, co-founder and executive chairman of Cosmo Tech, which specialises in production software and digital twins, it can also provide as close to a crystal ball as one can get in the real world. “What you need is not the data from the past or the present, but what you need is data from the future,” Morvan said.

By combining a digital twin of a plant with AI to extrapolate the data you feed it, it’s possible to enable detailed simulations of the future, and of the effect of potential decisions. “Once you have the simulation ability, you have the possibility to be able to run hundreds of thousands of simulations to find the best way to do something.” He added that this gives real visibility, informing the right decisions to achieve the contradictory goal of cutting costs and becoming more efficient.

Quality over quantity of data

If AI and simulation need to be fed data for their potential to be unlocked, it raises the question of how much data they really need. The answer, it turns out, is not ‘as much as possible’. In fact, as Ford found out, you can have too much of a good thing. De la Concepción said that her team has been working on AI-powered predictive maintenance for over five years and that one of the initial stumbling blocks was that the algorithms didn’t succeed in extracting anything useful out of the massive amounts of data they had been fed.

“You try to step back when you fail and fail and fail, and then you realise that this is not the best way to do it,” she explained. “You have to focus, as much as possible, on the problem that you want to show, and give value to production.”

Morvan said that you can even get away with very little data, agreeing that it’s important to stay focused on the problem that needs to be solved. It’s key to get a good ‘return on data’ and have granularity in the right places. He added that even with just coarse descriptions of each robot, you can run a lot of simulations to determine the ideal order of maintenance operations.
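As a rough illustration of that idea, and not a description of Cosmo Tech’s software, the sketch below evaluates every possible maintenance order for three robots described only by a service time and an hourly cost of waiting. The robot names, figures and cost model are all hypothetical assumptions made for the example.

```python
from itertools import permutations

# Hypothetical, deliberately coarse robot descriptions (all values are made up):
# how long each service takes, and what each hour of waiting for service costs.
robots = {
    "robot_a": {"service_hours": 2.0, "cost_per_hour_waiting": 10.0},
    "robot_b": {"service_hours": 1.0, "cost_per_hour_waiting": 30.0},
    "robot_c": {"service_hours": 3.0, "cost_per_hour_waiting": 5.0},
}


def total_cost(order):
    """Simulate servicing robots one after another and sum the waiting costs."""
    elapsed = 0.0
    cost = 0.0
    for name in order:
        elapsed += robots[name]["service_hours"]
        # Each robot accrues cost until its own service is finished.
        cost += elapsed * robots[name]["cost_per_hour_waiting"]
    return cost


# Try every ordering and keep the cheapest one.
best_order = min(permutations(robots), key=total_cost)
print(best_order, total_cost(best_order))
```

With only three robots every ordering can be checked exhaustively; a real plant would have far more options, which is where running large numbers of simulations comes in.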

Rather than the quantity of data, quality is usually the problem, reckoned Symbio’s Konkos, explaining: “What we found with most of our customers, is they sort of lacked the fidelity of real-time data we would need to really use their data. So it’s really more about having our robots trained on their own data. … We really focus on enabling the robot to do continuous improvement, where it’s learning from every cycle it’s executing and optimising its parameter set through that.”

The difficulty in getting the right data, rather than just lots of it, is why BMW has focused its efforts with AI on object recognition, like the application which checks whether the right badges have been applied to a car. Neural networks rely on trial and error to learn, and with image recognition, incorrect samples can easily be inserted as they are simply a series of pictures.

Schindler explained that predictive maintenance is much more challenging, even if it is an area where AI can really bring efficiency gains: “It is particularly hard if we have unbalanced data. When you look at a regular production which is mature, running on series production; very few incidents actually happen and typically they are very heterogeneous, so you need a very long time of data aggregation.”
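To see why unbalanced data is so awkward, consider a minimal sketch with invented numbers: 1,000 production cycles, of which only five end in an incident. A “model” that always predicts that nothing will go wrong looks excellent on accuracy yet catches no incidents at all. The figures and the function name are assumptions for illustration, not BMW data.

```python
def accuracy_and_recall(labels, predictions):
    """Return (accuracy, recall), where label 1 marks an incident."""
    pairs = list(zip(labels, predictions))
    correct = sum(1 for actual, predicted in pairs if actual == predicted)
    caught = sum(1 for actual, predicted in pairs if actual == 1 and predicted == 1)
    incidents = sum(labels)
    accuracy = correct / len(labels)
    recall = caught / incidents if incidents else 0.0
    return accuracy, recall


# Invented example: 995 normal cycles and 5 incidents.
labels = [0] * 995 + [1] * 5
# A trivial predictor that never flags an incident.
always_ok = [0] * len(labels)

accuracy, recall = accuracy_and_recall(labels, always_ok)
print(f"accuracy={accuracy:.3f}, recall={recall:.3f}")
```

Because incidents are so rare, a useful predictor has to be judged on how many of them it catches, and collecting enough incident examples to learn from takes a long time.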

Power to the people

No amount of data, simulation or software development can compensate for the people on the shop floor not being on board with the project, however. “If you’re really close to production, you need the support of the people on the line to make this a success,” said de la Concepción. “They have to be [engaged with] the problem, and be part of the solution, so they should be involved from the beginning of the project. This is why we work with proof of concept, and when we get the point that we want to be, then we scale the solution.”

Making sure that such complex new systems work for the people on the shop floor extends to the design. She added that for people to accept any innovation, it should be complex inside, but simple to configure and operate – as well as very robust, as previously discussed.

Morvan concurred, saying: “What is very important today … is having adoption by final users, as Paula said. It has to be simple. It has to be adopted and it has to provide value for the person who is going to use it. Otherwise, it’s not going to be used. It can be the greatest AI, but it’s not going to be used.”

BMW is taking the idea of employee adoption to heart. It set up an internal communication campaign explaining the advantages and requirements of AI and urging employees to come up with ideas about how AI could improve the production process or their own daily lives on the job. Schindler said in our deep dive interview that all the AI applications in use at the moment were developed based on requests from employees.

As it’s not possible for the relatively small AI team to build applications to make every employee’s life easier, BMW developed a self-service programme with a labelling tool that allows employees to set up their own computer vision AI application. The tool is not only available to BMW employees, but can be freely downloaded from BMW’s GitHub page as part of its philosophy of democratising AI.

Watch on demand

The 90-minute livestream can be watched on demand and includes further detail, as well as discussions about practical applications for AI, dealing with product variation, set-up processes and much more.
