In modern manufacturing, edge computing is becoming a game changer, enabling faster, more secure, and more efficient operations. Steve Mustard, an expert with the International Society of Automation (ISA), spoke with AMS on how this technology is shifting traditional processes and how it’s set to evolve with artificial intelligence.

Traditionally, manufacturers collected sensor data from equipment, such as vibration or RPM readings, and sent it off-site to be processed, often in distant data centres. While this approach offered insight, it was inefficient and slow. Edge computing changes that dynamic. Instead of being transmitted elsewhere, data is now processed right at the source: on the sensor itself or very nearby. This allows for real-time data analysis and faster decisions. Importantly, it also enhances security by reducing the need to transmit data externally.
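To make the contrast concrete, here is a minimal Python sketch of processing at the source. It assumes a device that buffers a short window of vibration samples and reduces it to a few summary values, so that only the summary needs to leave the device. The function name, the choice of RMS and peak as summary values, and the sample readings are illustrative assumptions, not details from the interview.

```python
import math
from typing import Sequence


def summarise_window(samples: Sequence[float]) -> dict:
    """Reduce one window of raw vibration samples to a few summary values."""
    if not samples:
        raise ValueError("window must contain at least one sample")
    # Root-mean-square amplitude of the window.
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    # Largest absolute excursion in the window.
    peak = max(abs(x) for x in samples)
    return {"count": len(samples), "rms": rms, "peak": peak}


# Hypothetical window of accelerometer readings (values are made up).
window = [3.0, -4.0, 3.0, -4.0]
summary = summarise_window(window)
# Only `summary` would be sent on; the raw window stays on the device.
```

In practice the window would hold thousands of samples, so sending three numbers instead of the raw stream is where the bandwidth and exposure savings would come from.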

“We’ve been doing basic monitoring for many years, analysing very specific things like vibration signatures. What’s different now is the ability to conduct much more detailed analysis directly at the edge, right where data is collected” – Steve Mustard, ISA

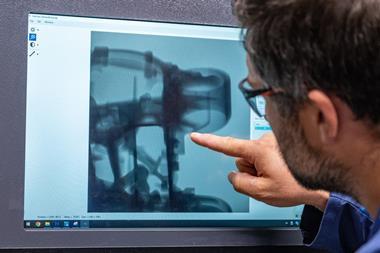

Mustard emphasises that what makes edge computing different isn’t that data is being collected, which has been happening for years, but that it’s now being processed locally. The next leap in this evolution is integrating AI and machine learning at the edge. Previously, applying machine learning to operations required powerful, remote servers and wasn’t practical on the factory floor. But today, manufacturers can run machine learning models in real time at the source. For example, machine vision systems can now not only identify defects as items move along the production line but also adjust processes immediately in response. This real-time feedback loop minimises waste, improves quality, and boosts efficiency.

However, getting to that point isn’t automatic. Machine learning models require tuning and iteration. Initially, teams may not know which variables are key to a process. There’s a period of learning and testing before they reach an optimal model. Once in place, though, these systems deliver actionable insights without the noise of irrelevant data, surfacing only what’s necessary for smart, immediate decision-making.
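The inspect-and-adjust feedback loop described above might be sketched as follows. This is a simplified illustration under stated assumptions, not a description of any real vision system: the class name `DefectFeedback`, the sliding-window defect rate, and the "slow the line by a fixed factor" response are all hypothetical. The window size and threshold are the kind of values a team would expect to tune during the learning period.

```python
from collections import deque


class DefectFeedback:
    """Tracks recent inspection results and suggests a line-speed change."""

    def __init__(self, window_size: int = 50, max_defect_rate: float = 0.05,
                 slowdown_factor: float = 0.9):
        # Keep only the most recent `window_size` inspection results.
        self.results = deque(maxlen=window_size)
        self.max_defect_rate = max_defect_rate
        self.slowdown_factor = slowdown_factor

    def update(self, is_defect: bool, current_speed: float) -> float:
        """Record one inspection result and return the speed to use next."""
        self.results.append(is_defect)
        defect_rate = sum(self.results) / len(self.results)
        if defect_rate > self.max_defect_rate:
            # Too many recent defects: slow the line by a fixed factor.
            return current_speed * self.slowdown_factor
        return current_speed


# Hypothetical run: four inspected items, the last two flagged as defective.
loop = DefectFeedback(window_size=4, max_defect_rate=0.25)
speed = 100.0
for flag in [False, False, True, True]:
    speed = loop.update(flag, speed)
```

In a real deployment the `is_defect` flag would come from a trained model, and the response would go through the plant's control system rather than a single multiplier.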

Steve Mustard is an independent automation consultant and a subject matter expert of the International Society of Automation (ISA). Backed by more than 30 years of engineering experience, Mustard specialises in real-time embedded equipment and automation systems. He serves as president of National Automation, Inc., and served as the 2021 president of ISA.

Edge technology: Smarter, faster and more scalable

The localisation of data processing is now at the core of edge computing, but as Mustard points out, the reason this wasn’t widely feasible before comes down to limitations in processing power, software, and the physical size and energy consumption of the technology. “You couldn’t do a lot of this previously. It wasn’t small enough and the power-hungry nature of it meant you couldn’t deploy it,” he says. But now, improvements in sensors and embedded processors, combined with better software, have made real-time, local data analysis possible and practical.

This transformation was further accelerated by the rise of generative AI. Although generative AI itself isn’t necessarily being deployed on the factory floor, it has opened minds to what AI in general can do. “Before that, we’d done machine learning for probably 40 or 50 years in control systems but focussing on very specific cases and very well defined. What you’ve got now is the capability to realise things that you never thought you could,” notes Mustard. This includes identifying patterns in vast datasets and surfacing insights humans might miss.

One of the biggest impacts of edge computing combined with AI is scalability and focus. Rather than drowning in data, manufacturers can now zero in on only what they need. “You’re getting specific data so you’re not just adding more machines and getting more and more data that you can’t manage,” Mustard explains. The data becomes usable, targeted, and tied to specific objectives, something he notes is especially beneficial for companies hesitant to dive into full digital transformation projects.
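One common way to forward only the data that matters is deadband filtering, also called report by exception: a reading is passed on only when it differs from the last reported value by more than a set amount. The sketch below assumes that technique for illustration. It is not something Mustard describes, and the function name, deadband value and temperature readings are made up.

```python
from typing import Iterable, Iterator


def report_by_exception(readings: Iterable[float], deadband: float) -> Iterator[float]:
    """Yield a reading only when it moves more than `deadband` from the last one reported."""
    last_reported = None
    for value in readings:
        if last_reported is None or abs(value - last_reported) > deadband:
            last_reported = value
            yield value


# Hypothetical temperature readings from one sensor.
temperatures = [20.0, 20.1, 20.2, 21.5, 21.6, 19.9]
forwarded = list(report_by_exception(temperatures, deadband=1.0))
# forwarded == [20.0, 21.5, 19.9]
```

Here six readings shrink to three, and the three that survive are the ones that mark a meaningful change.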

When asked if edge computing is viable for all businesses, including smaller suppliers or those slow to digitise, Mustard is optimistic. “One of the challenges with any digital transformation project is that it’s hard to define what the objective is… But once you see the art of the possible, you can start to see how you could improve things such as quality, responsiveness, throughput and energy costs.”

He emphasises the importance of focus and clear goals, cautioning against projects that chase buzzwords like IoT or big data without a clear problem to solve. Some companies may be ready to experiment, while others might take a conservative approach, choosing a small, specific project before gradually evolving their capabilities. Edge computing allows both paths. It’s no longer a monolithic investment but a modular, scalable approach that fits varied operational needs and budgets.

The hardware side has matured rapidly too. Sensors are not only cheaper but more sophisticated. “Better resolution, better accuracy, better quality data overall,” Mustard notes. These sensors now capture data that was previously too costly or impractical to measure. Combined with advances in software and AI, manufacturers can analyse and act on this data immediately.

Interestingly, Mustard draws a parallel between the shift to edge computing and the evolution of the energy sector. Just as energy generation is moving from large, centralised plants to localised, renewable micro-generation systems, data processing is becoming decentralised and closer to the point of use. This reduces inefficiencies and allows for real-time decision-making right where it matters.

Processing power has also kept pace. The emergence of affordable GPUs has allowed advanced AI models to be used in smaller, more targeted applications. “Now you can use huge banks of GPUs… and then imagine scaling them down to do a specific function in a production line much more easily,” says Mustard. This means even smaller operations can leverage sophisticated analytics without the need for massive infrastructure. It’s not just a technological shift but a strategic one, giving manufacturers of all sizes the ability to innovate at their own pace.

Legacy systems and the real-world path to edge computing

While the promise of edge computing in manufacturing is clear, many companies are still grappling with a persistent hurdle: legacy IT systems. In tackling this issue, Mustard emphasises the need for realistic expectations and a gradual, iterative approach.

Legacy systems are deeply embedded in industrial environments, performing critical control and monitoring functions. Replacing them outright isn’t feasible. “This is one of the big hurdles to achieve in this digital transformation,” says Mustard. “You can’t just replace your existing control system or manufacturing line with this new technology. You’ve got to do it gradually.”

This gradual implementation, however, is often hindered by compatibility issues. Many older systems were not designed to communicate with newer technologies or to handle the scale of data modern sensors can produce. As Mustard explains, “Those legacy systems might not have the ability to consume the data. You might not have the technology to communicate across the facility.”

One workaround, increasingly used in edge computing, is to bypass legacy systems and run analysis in parallel. But this isn’t without risk. “Those legacy systems are there to control and monitor those manufacturing processes. And so, you can’t kind of completely bypass them,” warns Mustard. Doing so can lead to inefficiencies or missed opportunities, especially when edge systems can’t directly influence legacy controls, limiting real-time responsiveness.

This can be particularly frustrating when manufacturers aim to use edge analytics to detect and respond to variations in equipment or process conditions, like changing material volumes or environmental factors. If the edge system isn’t integrated into the control loop, the full benefits, such as real-time process adjustments, are compromised. “You’re not benefiting fully from it because you’ve still got this latency because you’ve got to go back into an older system,” notes Mustard.

Despite these limitations, Mustard sees a clear path forward, through iteration. “If you go into this project thinking it’s going to solve all of your problems… then you’re going to be disappointed,” he said. “But if you understand that it’s a step along the way to digitalisation where everything is talking to everything and it’s adjusting everything automatically, then… you will be able to evolve over time.”

He recommends starting with small wins, like deploying sensors to monitor vibration and gradually adding analytics capabilities. Over time, these can evolve into more integrated solutions. “You go one step further… if you try and say I’m going to solve everything at once, you might fail.”

Mustard also warns against lengthy, rigid transformation plans: “Technology is evolving so quickly. I wouldn’t recommend anybody embark on a multi-year project. By the time a new facility or system is operational, it could already be outdated.”

In short, legacy systems remain a significant, but navigable, barrier to edge computing. The key is not to rush, but to adapt and evolve one step at a time.

Balancing security and progress

As edge computing becomes more embedded in manufacturing, questions around cybersecurity and legacy system integration grow louder. When asked if newer, more advanced edge systems offer greater security, Mustard explains, “It could do and it should do, but it doesn’t necessarily.” Processing data on-site rather than sending it externally is inherently more secure, returning to a model where operations aren’t constantly exposed to the internet. But the reality is more nuanced. “Some vendors haven’t really considered security and so they’ve concentrated more on performance and capabilities,” observes Mustard.

Edge computing is a double-edged sword: more capability, but also more risk. As Mustard puts it, “You’ve now got more information, more valuable information or more sensitive information than you used to have.” Instead of monitoring basic parameters like temperature or pressure, companies are now generating strategic insights, like production volume or operational efficiency, that, if compromised, could be harmful. “You’re doing more and you’re making it more secure potentially, but you’re also potentially exposing yourself to more security risks.”

The discussion turned to legacy systems, which Mustard labels as a clear vulnerability: “Legacy systems… are really the Achilles heel when it comes to security.” These older systems often rely on “security by obscurity”, staying safe simply because they aren’t connected to the internet. But that’s not a sustainable defence strategy. “That’s not a great security posture to have,” warns Mustard. Transitioning to modern architectures not only improves performance and analytics but also allows for stronger, integrated security frameworks.

To help businesses navigate this transition, Mustard offers three key steps for adopting edge computing effectively:

- Start with a clear, narrow objective: “A very narrowly defined use case, very specific use case that you know is achievable, that has clear business benefit,” Mustard advised. Avoid vague, all-encompassing transformation goals. Know what you’re solving.

- Implement in an agile way: Traditional rollout models often fail because they’re too rigid or slow. “You want to do a minimal viable product… and then you build on it in an agile way over time,” he said. Start small, learn fast, and adapt.

- Measure success properly: Many digital projects falter because there’s no baseline for success. “Put in place up front a way of measuring it so that you know what you get,” Mustard emphasised. Even negative results can be valuable if the project is agile enough to pivot quickly.

Mustard stresses the importance of realism. Adopting edge computing isn’t a magic fix. It’s a journey, one that requires clarity, agility, and accountability. When done right, it can deliver serious gains in performance, efficiency, and security, without getting lost in the complexity.
